The Panshi cloud operating system kernel is designed for the container runtime, with security and compatibility built into its design.

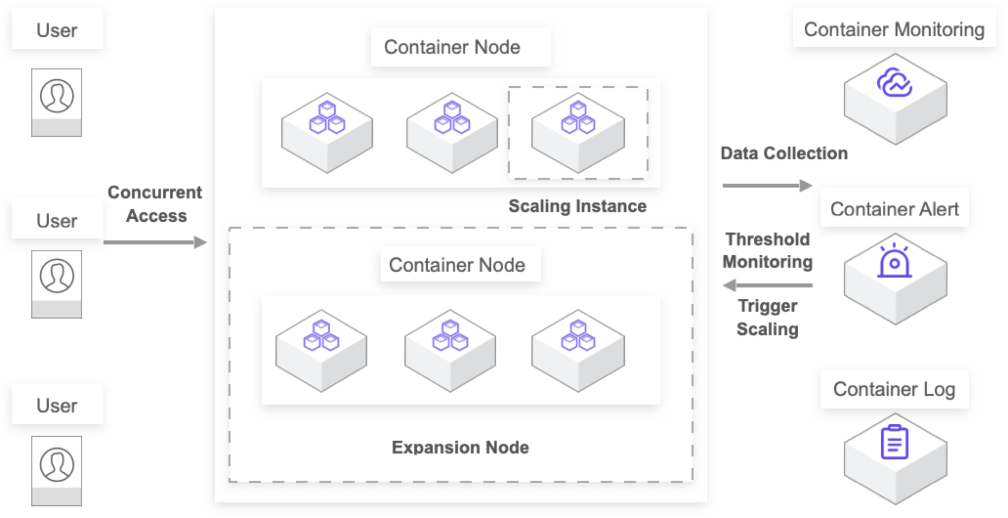

Services scale out and in by policy according to access traffic.

Fully compatible with Kubernetes community versions, flexible to deploy, and independent of the underlying cloud platform.

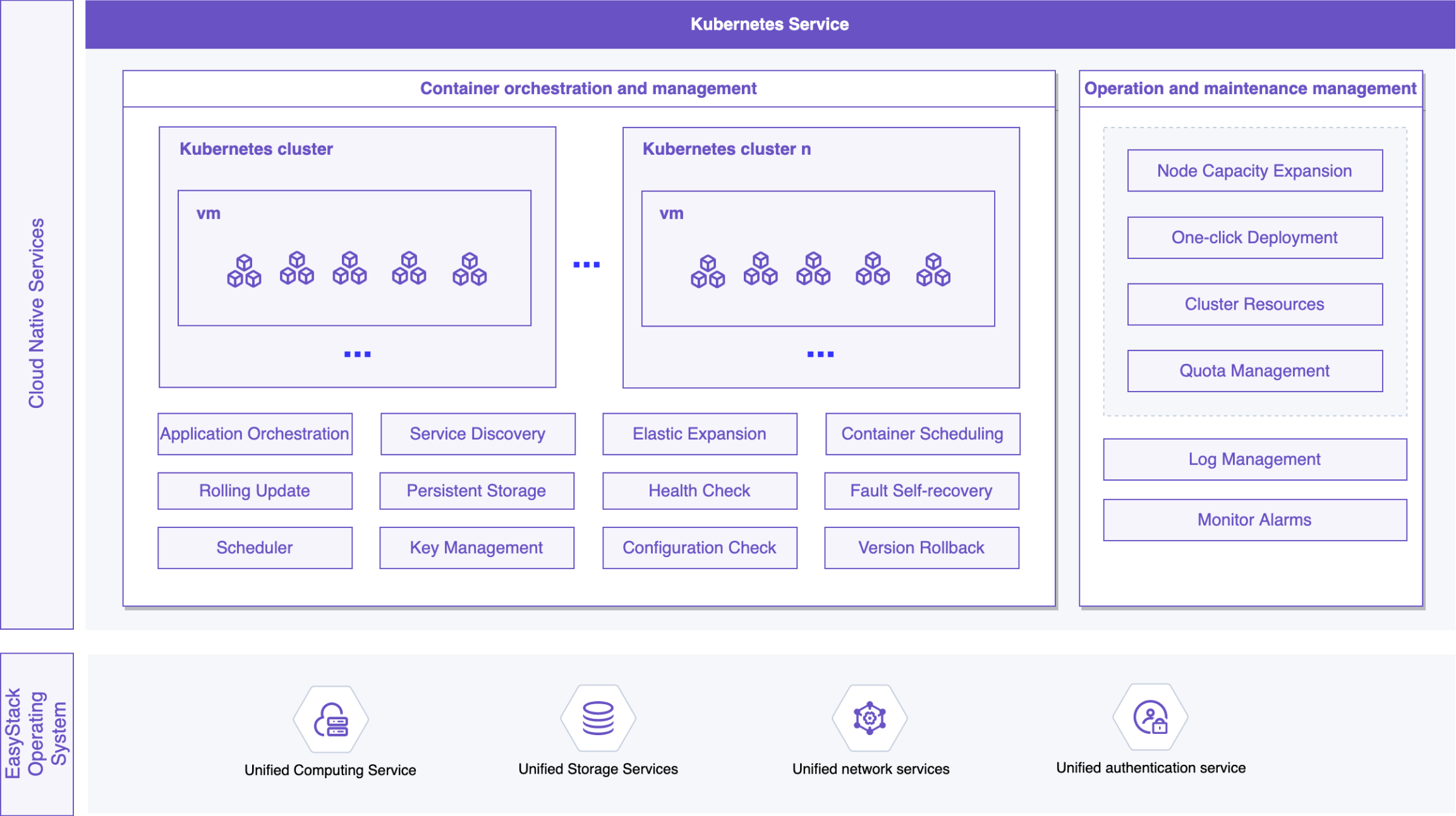

Built around unified multi-cluster management, the service deploys Kubernetes clusters on underlying cloud infrastructure resources and quickly completes node initialization. Depending on the use case, you can deploy Kubernetes clusters of different sizes, such as a test environment with a single master node or a highly available production environment with multiple master nodes. Each tenant can create multiple Kubernetes clusters and manage them through operations such as cluster expansion, monitoring, and deletion.

Professional support and services are backed by CNCF certification as a Kubernetes Certified Service Provider (KCSP) and Kubernetes Training Partner (KTP), together with Kubernetes conformance verification.

Through the cloud provider integration, Kubernetes can directly use the cloud platform for persistent volumes, load balancing, network routing, DNS resolution, and horizontal scaling.

Supports multiple container deployment methods, including specifying images, Chart templates, YAML imports, and automated pipelines.

Clusters can be deployed across availability zones (AZs). Distributing cluster nodes over multiple AZs provides high availability and disaster recovery.

The cluster control plane supports a three-master high-availability (HA) configuration to ensure service continuity.
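
Why three masters rather than two can be illustrated with majority-quorum arithmetic. The sketch below assumes the control plane's state store needs a majority of members to stay available, as etcd does in a standard Kubernetes control plane. The source does not describe the platform's internals, so treat the function names and the model itself as illustrative assumptions.

```python
def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    if members < 1:
        raise ValueError("members must be >= 1")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


if __name__ == "__main__":
    # Compare common control plane sizes under the majority-quorum assumption.
    for n in (1, 2, 3, 5):
        print(f"masters={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

Under this assumption, two masters tolerate no more failures than one, while three masters keep the control plane available when a single master is lost.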

Supports mainstream chips on the x86 and Arm computing architectures, such as Intel, Kunpeng, and Phytium.

Container services can be integrated and deployed with cloud infrastructure to seamlessly connect with the capabilities of the underlying cloud platform.

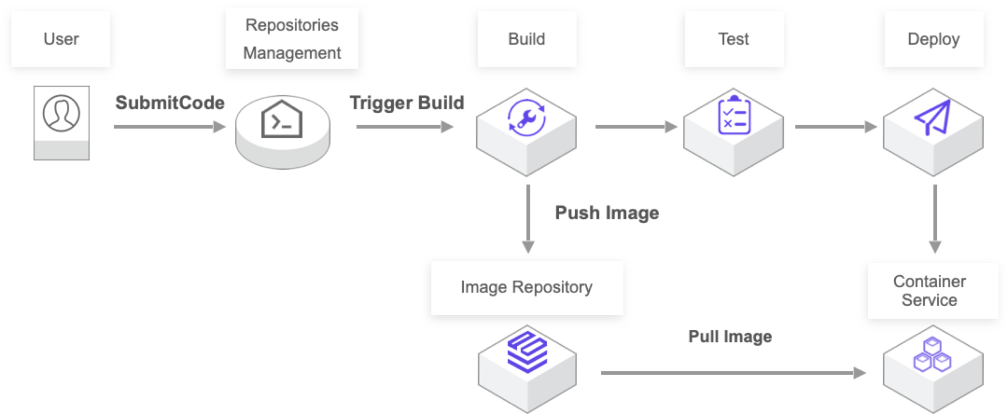

With DevOps services, the platform automatically handles code compilation, image building, testing, and containerized deployment starting from your code source. This one-stop containerized delivery process improves software release efficiency and reduces release risk.

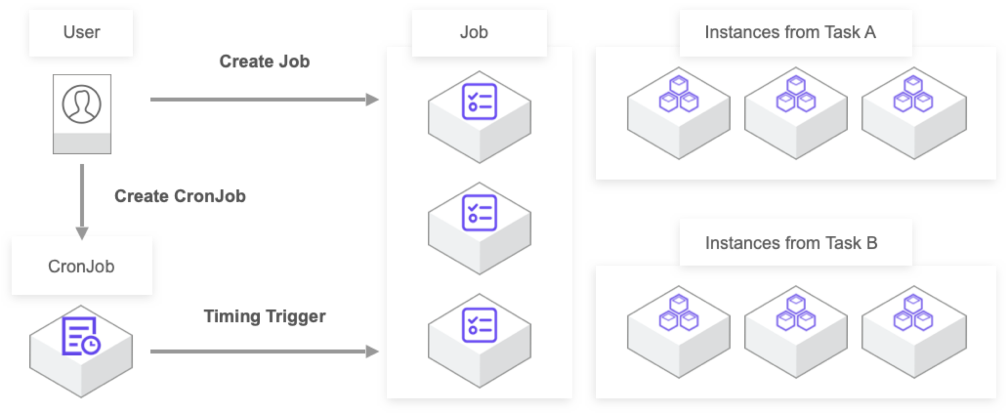

You can create task-oriented workloads that execute sequentially or in parallel in a Kubernetes container cluster. Both short one-time tasks and periodic tasks are supported. A one-time task runs once and can start as soon as it is deployed. A periodic task runs a short task on a specified schedule (for example, every day at 8 am) and suits jobs such as periodic time synchronization and data backup.
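
In upstream Kubernetes, these two task types correspond to the `batch/v1` `Job` and `CronJob` resources. The sketch below builds both manifests as plain Python dictionaries and prints them as JSON, which Kubernetes accepts alongside YAML. The helper names, task names, image, and commands are hypothetical placeholders, and the source does not confirm which resources the platform creates behind its console.

```python
import json


def build_job_spec(image, command, completions=1, parallelism=1):
    """Job spec: run `completions` Pods to success, `parallelism` at a time."""
    return {
        "completions": completions,
        "parallelism": parallelism,
        "template": {
            "spec": {
                # Jobs require a restartPolicy of Never or OnFailure.
                "restartPolicy": "Never",
                "containers": [
                    {"name": "task", "image": image, "command": command}
                ],
            }
        },
    }


def build_job(name, image, command, completions=1, parallelism=1):
    """One-time task that starts when the manifest is applied."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": build_job_spec(image, command, completions, parallelism),
    }


def build_cron_job(name, image, command, schedule):
    """Periodic task: `schedule` uses the five-field cron format."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,
            "jobTemplate": {"spec": build_job_spec(image, command)},
        },
    }


if __name__ == "__main__":
    # Hypothetical examples: a parallel one-time task and a daily 8 am backup.
    one_time = build_job(
        "import-data", "busybox:1.36", ["sh", "-c", "echo importing"],
        completions=4, parallelism=2,
    )
    daily = build_cron_job(
        "daily-backup", "busybox:1.36", ["sh", "-c", "echo backing up"],
        schedule="0 8 * * *",  # minute 0, hour 8, every day
    )
    print(json.dumps(one_time, indent=2))
    print(json.dumps(daily, indent=2))
```

Setting `parallelism` to 1 gives sequential execution, while a higher value lets several Pods of the same task run at once.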

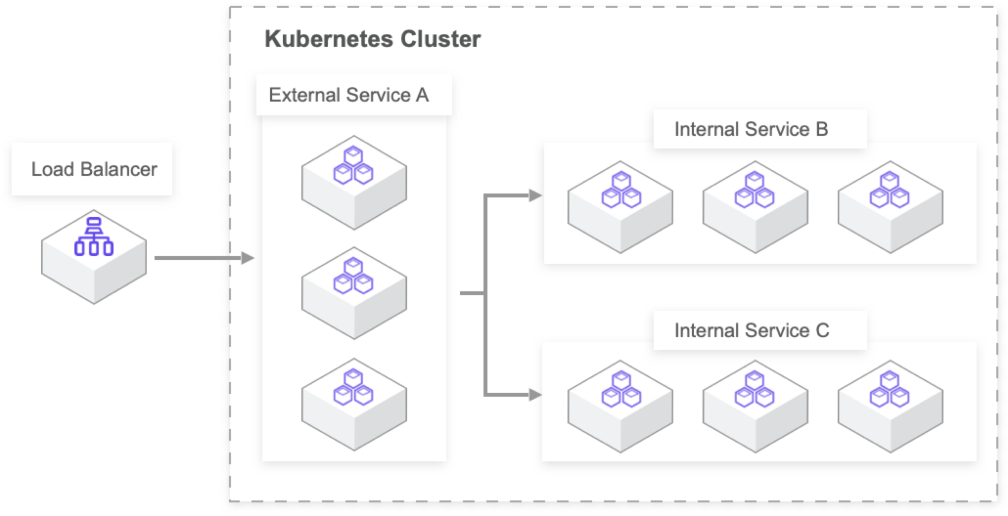

Microservice architecture is suitable for building complex applications. It separates a monolithic application into multiple manageable microservices along different dimensions, and each service can be deployed and scaled independently. Once the application is split into microservices, you focus only on iterating each microservice, while the platform provides the scheduling, orchestration, deployment, and release capabilities.

Services scale out and in according to access traffic, which avoids system failures caused by traffic surges as well as the waste of idle resources. When the average CPU or memory load of the Kubernetes Pods that belong to a workload exceeds the threshold, the workload is scaled elastically at the Pod level. When cluster resources are insufficient, cluster nodes can be quickly added to carry more containers.
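
Pod-level scaling of this kind can be sketched with the replica formula documented for the upstream Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The source does not state that the platform uses this exact algorithm, so the function below, its parameter names, and its default limits are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count following the upstream HPA formula.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0 and clamped to
    the [min_replicas, max_replicas] range otherwise.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Small deviations are ignored to avoid constant rescaling.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Average CPU utilization of 90% against a 60% target, 4 Pods running.
    print(desired_replicas(4, 90, 60))
    # Load drops to 30%: the same formula scales the workload in.
    print(desired_replicas(4, 30, 60))
```

When the computed replica count no longer fits on the existing nodes, node-level expansion takes over, which is the second stage the paragraph above describes.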